Refocusing a photograph taken with Intel RealSense 3D cameras


A photograph taken with an Intel RealSense camera contains more than just the color of the scene; it also records the scene's depth. This makes extra things possible, such as changing the photo's focus after it has been taken.

Project status: Published/In Market

RealSense™, Internet of Things

Intel Technologies

Other

Overview / Usage

There is an interesting mode in the Google Camera app, called Lens Blur, that lets you refocus a picture after it has been taken. It essentially simulates what a light-field camera does, but with just a regular smartphone.

To do this, the app uses an array of computer vision techniques, such as Structure-from-Motion (SfM) and Multi-View Stereo (MVS), to create a depth map of the scene. Running this entire pipeline on a smartphone is remarkable, to say the least. You can read more about how this is done in Google's "Lens Blur in New Google Camera App" post.

Once the depth map and the photo are acquired, the user can select the focus location, and the desired depth of field. A thin lens model is then used to simulate a real lens matching the desired focus location and depth of field, generating a new image.
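The thin lens model mentioned above can be sketched as follows. For a lens with focal length f and f-number N focused at distance S1, a point at distance S2 is imaged as a blur disk (the circle of confusion) with diameter c = (f/N) * |S2 - S1| / S2 * f / (S1 - f). The Python below is a minimal sketch, not Google's implementation: it converts that diameter into a per-pixel blur radius and applies a naive variable-size box blur to a grayscale image stored as a list of rows. The default focal length, f-number, and sensor pixel pitch are made-up values, and `coc_diameter` and `refocus` are hypothetical helper names.

```python
def coc_diameter(depth_m, focus_m, focal_mm, f_number):
    """Thin-lens circle-of-confusion diameter on the sensor, in millimeters."""
    f = focal_mm / 1000.0            # focal length in meters
    aperture = f / f_number          # aperture diameter in meters
    c = aperture * abs(depth_m - focus_m) / depth_m * f / (focus_m - f)
    return c * 1000.0                # meters -> millimeters


def refocus(image, depth, focus_xy, focal_mm=50.0, f_number=2.0,
            pixel_pitch_mm=0.01, max_radius=8):
    """Blur a grayscale image (list of rows) according to its depth map (meters).

    focus_xy is the (x, y) pixel the user selected; its depth becomes the focus
    distance. A smaller f_number means a larger aperture and a shallower depth
    of field. All default lens/sensor values are hypothetical.
    """
    h, w = len(image), len(image[0])
    fx, fy = focus_xy
    focus_m = depth[fy][fx]
    if focus_m <= 0:
        raise ValueError("no depth at the selected focus point")
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Holes in the depth map (0) are treated as in focus, i.e. left sharp.
            d = depth[y][x] if depth[y][x] > 0 else focus_m
            coc_px = coc_diameter(d, focus_m, focal_mm, f_number) / pixel_pitch_mm
            r = min(max_radius, int(round(coc_px / 2)))  # blur radius in pixels
            # Naive square "gather" blur: average every neighbor within r.
            total, count = 0.0, 0
            for yy in range(max(0, y - r), min(h, y + r + 1)):
                for xx in range(max(0, x - r), min(w, x + r + 1)):
                    total += image[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out
```

The square gather blur keeps the example short; a more faithful renderer would use a disk-shaped kernel and take care not to let blurred background bleed over a sharp foreground at depth edges.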

In theory, any photograph with its corresponding depth map could be used with this technique. To test this, I decided to use an Intel RealSense F200 camera, and it worked.

Methodology / Approach

I first took a color image and a depth image with the RealSense camera. Then I projected the depth image into the color camera's frame of reference:

Color photograph from RealSense F200

Depth image projected into color frame of reference from RealSense F200

You can watch my tutorial about RealSense to see how you can do this.
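The projection of the depth image into the color camera amounts to three operations per depth pixel: deproject it to a 3D point with the depth camera's intrinsics, move that point into the color camera's frame with the depth-to-color extrinsics, and project it with the color camera's intrinsics. The Python below spells this out under simplifying assumptions: a pinhole model with no lens distortion, depth already converted to meters, and calibration values supplied by the caller (in practice they come from the camera). `Intrinsics` and `align_depth_to_color` are hypothetical names; the RealSense SDK provides its own helpers for this, which are not reproduced here.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole intrinsics (hypothetical container; distortion is ignored)."""
    fx: float
    fy: float
    cx: float
    cy: float
    width: int
    height: int


def align_depth_to_color(depth, depth_intr, color_intr, rotation, translation):
    """Reproject a depth map (list of rows, meters, 0 = no data) into the color image.

    rotation is a 3x3 row-major matrix and translation a 3-vector that map
    points from the depth camera frame to the color camera frame.
    """
    out = [[0.0] * color_intr.width for _ in range(color_intr.height)]
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # hole in the depth map
            # 1. Deproject the depth pixel to a 3D point in the depth camera frame.
            p = ((u - depth_intr.cx) * z / depth_intr.fx,
                 (v - depth_intr.cy) * z / depth_intr.fy,
                 z)
            # 2. Transform the point into the color camera frame.
            q = [sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
                 for i in range(3)]
            if q[2] <= 0:
                continue  # behind the color camera
            # 3. Project the point onto the color image plane.
            uc = int(round(q[0] / q[2] * color_intr.fx + color_intr.cx))
            vc = int(round(q[1] / q[2] * color_intr.fy + color_intr.cy))
            if 0 <= uc < color_intr.width and 0 <= vc < color_intr.height:
                # Simple z-buffer: keep the nearest surface when pixels collide.
                if out[vc][uc] == 0 or q[2] < out[vc][uc]:
                    out[vc][uc] = q[2]
    return out
```

Color pixels that receive no depth sample stay at 0, so the aligned map typically has small holes that a real pipeline would fill before further processing.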

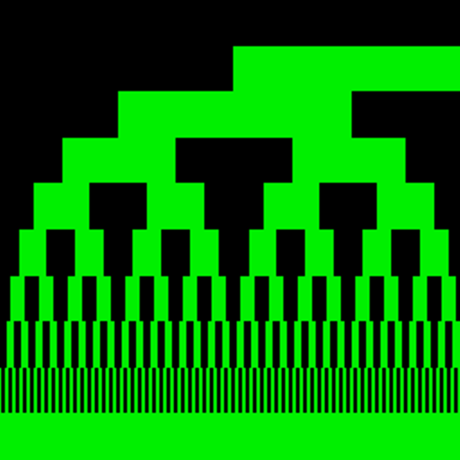

The next step was to encode the depth image into a format that Google Camera understands, so I followed the encoding instructions in Google's documentation. The RangeLinear encoding of the depth map above looks something like this:
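The RangeLinear step can be sketched in Python, assuming the formula d_n = (d - near) / (far - near) from Google's depth map definition, with near and far taken from the valid depth values. Quantizing to 8 bits, writing the result as a grayscale PNG, and the way holes are handled are assumptions made for this sketch; `encode_range_linear` and `gray8_to_png` are hypothetical names. The same definition also describes a RangeInverse format, which gives more precision to near depths; it is not shown here.

```python
import struct
import zlib


def encode_range_linear(depth, near=None, far=None):
    """Quantize a depth map (list of rows, meters, 0 = no data) to 8 bits
    with RangeLinear: dn = (d - near) / (far - near)."""
    valid = [d for row in depth for d in row if d > 0]
    near = min(valid) if near is None else near
    far = max(valid) if far is None else far
    span = far - near
    encoded = []
    for row in depth:
        out_row = []
        for d in row:
            d = min(max(d, near), far)  # clamp; holes (0) end up at `near`
            dn = (d - near) / span if span > 0 else 0.0
            out_row.append(int(round(dn * 255)))
        encoded.append(out_row)
    return encoded, near, far


def gray8_to_png(pixels):
    """Minimal 8-bit grayscale PNG writer using only the standard library."""
    height, width = len(pixels), len(pixels[0])

    def chunk(kind, data):
        # Each PNG chunk: length, type, data, CRC-32 over type + data.
        body = kind + data
        return struct.pack(">I", len(data)) + body + struct.pack(">I", zlib.crc32(body))

    # IHDR: width, height, bit depth 8, color type 0 (grayscale), default methods.
    ihdr = struct.pack(">IIBBBBB", width, height, 8, 0, 0, 0, 0)
    raw = b"".join(b"\x00" + bytes(row) for row in pixels)  # filter type 0 per row
    return (b"\x89PNG\r\n\x1a\n" + chunk(b"IHDR", ihdr)
            + chunk(b"IDAT", zlib.compress(raw)) + chunk(b"IEND", b""))
```

The near and far values returned alongside the pixels have to be stored with the image, since a reader needs them to turn the 0..255 codes back into distances.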

The final step was to embed the encoded image in the metadata of the original color image and copy the resulting photo into the smartphone's gallery. After that, I could simply open the app, select the image, and refocus it!
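Embedding the depth map comes down to wrapping the encoded image in an XMP packet and placing that packet in a JPEG APP1 segment. The sketch below assumes the GDepth property names (`GDepth:Format`, `GDepth:Near`, `GDepth:Far`, `GDepth:Mime`, `GDepth:Data`) and the namespace URI `http://ns.google.com/photos/1.0/depthmap/` from Google's depth map definition; `build_gdepth_xmp` and `insert_xmp` are hypothetical helpers, and Lens Blur may expect further properties that are not shown here.

```python
import base64
import struct

XMP_SIGNATURE = b"http://ns.adobe.com/xap/1.0/\x00"  # marks an XMP APP1 segment


def build_gdepth_xmp(depth_png, near, far):
    """Wrap an encoded depth image in an XMP packet using GDepth properties."""
    data = base64.b64encode(depth_png).decode("ascii")
    packet = (
        '<x:xmpmeta xmlns:x="adobe:ns:meta/">'
        '<rdf:RDF xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#">'
        '<rdf:Description rdf:about=""'
        ' xmlns:GDepth="http://ns.google.com/photos/1.0/depthmap/"'
        ' GDepth:Format="RangeLinear"'
        f' GDepth:Near="{near}" GDepth:Far="{far}"'
        ' GDepth:Mime="image/png"'
        f' GDepth:Data="{data}"/>'
        "</rdf:RDF></x:xmpmeta>"
    )
    return packet.encode("utf-8")


def insert_xmp(jpeg, xmp):
    """Return a copy of `jpeg` (bytes) with an XMP APP1 segment right after SOI.

    Assumes the JPEG does not already carry an XMP segment.
    """
    if jpeg[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG: missing SOI marker")
    payload = XMP_SIGNATURE + xmp
    length = len(payload) + 2  # the length field counts its own 2 bytes
    if length > 0xFFFF:
        raise ValueError("XMP too large for one APP1 segment (needs Extended XMP)")
    segment = b"\xff\xe1" + struct.pack(">H", length) + payload
    return jpeg[:2] + segment + jpeg[2:]
```

A single APP1 segment is limited to about 64 KB, so a full-resolution depth map will usually not fit. The XMP specification's Extended XMP mechanism, which splits a packet across several APP1 segments, is the usual way around this limit and is not implemented in this sketch.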

Technologies Used

For this project I used an Intel RealSense F200 camera, the Lens Blur mode of the Google Camera app, and Adobe's Extensible Metadata Platform (XMP) to write the metadata into the photograph. I wrote the depth encoding based on Google's depth map metadata definition.

Other links

- Adobe XMP

- Accessing data from the Intel RealSense F200

- Intel RealSense F200 with Google Camera's Lens Blur

- Interfacing Intel RealSense 3D camera with Google Camera’s Lens Blur

- Exploiting parallax in panoramic capture to construct light fields

- Lens Blur in New Google Camera App

- Intel® RealSense™ SDK (https://github.com/IntelRealSense/librealsense)