Automatic Image-Caption Generator


This project guides you through creating a neural network architecture that automatically generates captions from images.

Project status: Published/In Market

Groups

DeepLearning

Artificial Intelligence India

Student Developers for AI

Intel Technologies

AI DevCloud / Xeon

Overview / Usage

In this project, we will look at an interesting multimodal topic that combines image and text processing to build a useful deep learning application: image captioning. Image captioning is the process of generating a textual description of an image based on the objects and actions in it.

The main goal is to combine a CNN and an RNN into an automatic image captioning model that takes an image as input and outputs a sequence of text describing the image.

But why caption the images?

Image captions are used in a variety of applications. For example:

- Captions can describe images to people who are blind or have low vision and who rely on sound and text to understand a scene.

- In web development, it’s good practice to provide a description for any image that appears on the page so that an image can be read or heard as opposed to just seen. This makes web content accessible.

- Captioning can also be used to describe video in real time.

A captioning model relies on two main components: a CNN and an RNN. Captioning is about merging the two to combine their most powerful attributes:

- CNNs excel at preserving spatial information in images, and

- RNNs work well with any kind of sequential data, such as a sequence of words to be generated. By merging the two, you get a model that can find patterns in images and then use that information to generate a description of those images.

Methodology / Approach

Logically, the task of image captioning can be divided into two modules: an image-based model, which extracts the features and nuances of the image, and a language-based model, which translates the features and objects produced by the image-based model into a natural sentence.

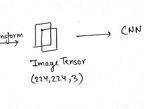

For the image-based model (the encoder), we usually rely on a Convolutional Neural Network (CNN); for the language-based model (the decoder), we rely on a Recurrent Neural Network (RNN). We feed an image into a CNN, using a pre-trained network such as VGG16 or ResNet. Since we want a set of features that represents the spatial content of the image, we remove the final fully connected layer that classifies the image and instead use the output of an earlier layer that processes the image's spatial information.

The CNN now acts as a feature extractor that compresses the information in the original image into a smaller representation. Because it encodes the content of the image into a smaller feature vector, this CNN is often called the encoder.

We then process this feature vector and use it as the initial input to the RNN that follows. That RNN is called the decoder because it decodes the processed feature vector and turns it into natural language.
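The decoder can be sketched as an LSTM whose first input is the image feature vector, followed by the embedded caption words. This is one common design, assumed here rather than taken from the repository; `DecoderRNN`, its layer sizes, and the greedy `sample` method are hypothetical names for illustration.

```python
from typing import List, Optional

import torch
import torch.nn as nn


class DecoderRNN(nn.Module):
    """LSTM decoder sketch: image features are the first input step, then caption words."""

    def __init__(self, embed_size: int, hidden_size: int, vocab_size: int, num_layers: int = 1):
        super().__init__()
        self.word_embeddings = nn.Embedding(vocab_size, embed_size)
        self.lstm = nn.LSTM(embed_size, hidden_size, num_layers, batch_first=True)
        self.fc = nn.Linear(hidden_size, vocab_size)

    def forward(self, features: torch.Tensor, captions: torch.Tensor) -> torch.Tensor:
        # features: (batch, embed_size); captions: (batch, seq_len) token ids.
        # The last token is never used as an input: nothing is predicted after it.
        embeddings = self.word_embeddings(captions[:, :-1])             # (batch, seq_len - 1, embed_size)
        inputs = torch.cat((features.unsqueeze(1), embeddings), dim=1)  # (batch, seq_len, embed_size)
        hidden, _ = self.lstm(inputs)                                   # (batch, seq_len, hidden_size)
        # The scores at step t are the prediction for captions[:, t].
        return self.fc(hidden)                                          # (batch, seq_len, vocab_size)

    @torch.no_grad()
    def sample(self, features: torch.Tensor, max_len: int = 20,
               end_idx: Optional[int] = None) -> List[int]:
        """Greedy decoding for one image (features: (1, embed_size)); returns token ids."""
        inputs = features.unsqueeze(1)  # (1, 1, embed_size)
        states = None                   # LSTM starts from zero hidden and cell states
        ids = []
        for _ in range(max_len):
            hidden, states = self.lstm(inputs, states)         # (1, 1, hidden_size)
            token = self.fc(hidden.squeeze(1)).argmax(dim=1)   # (1,) most likely next word
            ids.append(token.item())
            if ids[-1] == end_idx:
                break
            inputs = self.word_embeddings(token).unsqueeze(1)  # feed the prediction back in
        return ids
```

During training the whole caption is fed in at once (teacher forcing); at inference time, `sample` feeds each predicted word back in as the next input until it emits `end_idx` or reaches `max_len`.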

The RNN component of the captioning network is trained on the captions in the COCO dataset. We aim to train the RNN to predict the next word of a sentence based on the previous words. To do this, we transform the captions associated with each image into lists of tokenized words, and each token is then mapped to an integer index in the vocabulary, so every caption string becomes a list of integers.
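A small standard-library sketch of turning a caption into a list of integers. The regex mirrors the pattern used by NLTK's `wordpunct_tokenize`; the `<start>`, `<end>`, and `<unk>` markers and the tiny `word2idx` vocabulary are hypothetical. The last helper lists the (previous words, next word) pairs that next-word training implies.

```python
import re
from typing import Dict, List, Tuple


def tokenize(caption: str) -> List[str]:
    """Lowercase a caption and split it into word and punctuation tokens."""
    # Regex stand-in for an NLTK tokenizer (same pattern as NLTK's wordpunct_tokenize).
    return re.findall(r"\w+|[^\w\s]+", caption.lower())


def caption_to_ids(caption: str, word2idx: Dict[str, int]) -> List[int]:
    """Wrap the tokens in <start>/<end> markers and map each one to its vocabulary index."""
    unk = word2idx["<unk>"]
    tokens = ["<start>"] + tokenize(caption) + ["<end>"]
    return [word2idx.get(token, unk) for token in tokens]


def next_word_pairs(ids: List[int]) -> List[Tuple[List[int], int]]:
    """Every (previous words, next word) training pair contained in one caption."""
    return [(ids[:i], ids[i]) for i in range(1, len(ids))]


# Hypothetical toy vocabulary for illustration.
word2idx = {"<pad>": 0, "<start>": 1, "<end>": 2, "<unk>": 3,
            "a": 4, "dog": 5, "on": 6, "the": 7, "grass": 8}
ids = caption_to_ids("A dog on the grass.", word2idx)
print(ids)                       # [1, 4, 5, 6, 7, 8, 3, 2]  ("." is not in the vocabulary)
print(next_word_pairs(ids)[:2])  # [([1], 4), ([1, 4], 5)]
```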

Technologies Used

I used PyTorch in this project and initialized the COCO API to obtain the data.

To process words and create a vocabulary, I used NLTK (the Natural Language Toolkit for Python).
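One way to build the vocabulary with NLTK is to count tokens across all training captions and keep the words that occur at least `threshold` times, as sketched below. `build_vocab`, the special tokens, and the default threshold of 5 are assumptions. `wordpunct_tokenize` is used because it is purely regex-based, whereas `nltk.word_tokenize` first needs the Punkt tokenizer data downloaded.

```python
from collections import Counter
from typing import Dict, Iterable

from nltk.tokenize import wordpunct_tokenize

# Reserved tokens: padding, caption start/end markers, and out-of-vocabulary words.
SPECIAL_TOKENS = ["<pad>", "<start>", "<end>", "<unk>"]


def build_vocab(captions: Iterable[str], threshold: int = 5) -> Dict[str, int]:
    """Map every word that appears at least `threshold` times to an integer index."""
    counter = Counter()
    for caption in captions:
        counter.update(wordpunct_tokenize(caption.lower()))
    word2idx = {token: idx for idx, token in enumerate(SPECIAL_TOKENS)}
    # Counter keeps first-seen order, so indices are deterministic for a given corpus.
    for word, count in counter.items():
        if count >= threshold and word not in word2idx:
            word2idx[word] = len(word2idx)
    return word2idx
```

For instance, `build_vocab(["A dog runs.", "a dog sits", "A cat"], threshold=2)` returns `{"<pad>": 0, "<start>": 1, "<end>": 2, "<unk>": 3, "a": 4, "dog": 5}`; rarer words are mapped to `<unk>` when captions are converted to integers.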

To train the model, we can use the Intel AI DevCloud or an external GPU.
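A sketch of a single training step for next-word prediction that runs on whatever device PyTorch can see. It assumes an encoder that maps images to `(batch, embed_size)` features and a decoder that takes those features as its first input step and returns `(batch, seq_len, vocab_size)` scores, where step t scores token t of the caption. `train_step` is a hypothetical helper, and cross-entropy loss is an assumption consistent with choosing one word out of the vocabulary at each step.

```python
import torch
import torch.nn as nn

# Use a CUDA GPU when PyTorch can see one; otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")


def train_step(encoder: nn.Module, decoder: nn.Module, images: torch.Tensor,
               captions: torch.Tensor, criterion: nn.Module,
               optimizer: torch.optim.Optimizer) -> float:
    """One optimization step of next-word prediction; returns the batch loss.

    Assumes both modules were already moved to `device` and put in training mode.
    """
    images, captions = images.to(device), captions.to(device)
    optimizer.zero_grad()
    features = encoder(images)             # (batch, embed_size)
    outputs = decoder(features, captions)  # (batch, seq_len, vocab_size)
    # Flatten so every time step is one classification over the vocabulary.
    loss = criterion(outputs.reshape(-1, outputs.size(-1)), captions.reshape(-1))
    loss.backward()
    optimizer.step()
    return loss.item()
```

Calling this once per batch inside an epoch loop, with an optimizer over the decoder's parameters and the encoder's trainable layers, gives the usual training procedure.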

**The libraries** used in this project are:

- scikit-image

- NumPy

- SciPy

- PIL / Pillow

- OpenCV-Python

- torchvision

- matplotlib

Repository

https://github.com/Garima13a/Automatic-Image-Captioning