Blind Navigation System Using Artificial Intelligence


To make the environment audible for blind people, this project focuses on assistive devices for visually impaired people. It uses image and video processing to convert visual data into an alternate rendering modality that is suitable for a blind user. The alternate modality can be auditory, haptic, or a combination of both, and artificial intelligence is used to perform this conversion from the visual modality to another.
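As a minimal sketch of the modality conversion, assuming an image classifier has already produced a label and a confidence score, the Python below shows how one result could be rendered as speech, as a vibration pattern, or both. The `Modality` enum, the phrase template, and the `HAPTIC_PATTERNS` table are hypothetical illustrations, not part of the project.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List, Optional


class Modality(Enum):
    AUDITORY = "auditory"
    HAPTIC = "haptic"
    BOTH = "both"


# Hypothetical label-to-vibration table: alternating on/off durations in ms.
HAPTIC_PATTERNS: Dict[str, List[int]] = {
    "person": [400, 200, 400],
    "car": [100, 100, 100, 100, 100],
}
DEFAULT_PATTERN: List[int] = [200]  # used for labels not in the table


@dataclass
class Rendering:
    speech: Optional[str]              # text for a text-to-speech engine
    vibration_ms: Optional[List[int]]  # pattern for a vibration motor


def render(label: str, confidence: float, modality: Modality) -> Rendering:
    """Convert one visual recognition result into non-visual output(s)."""
    speech = None
    vibration = None
    if modality in (Modality.AUDITORY, Modality.BOTH):
        speech = f"{label}, {round(confidence * 100)} percent sure"
    if modality in (Modality.HAPTIC, Modality.BOTH):
        # Copy the pattern so callers cannot mutate the shared table.
        vibration = list(HAPTIC_PATTERNS.get(label, DEFAULT_PATTERN))
    return Rendering(speech, vibration)
```

Keeping the two outputs independent means the same recognition result can drive headphones, a vibration motor, or both, depending on what the user prefers.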

Project status: Published/In Market

Mobile, HPC, Networking, Internet of Things, Game Development, Artificial Intelligence

Overview / Usage

Imagine the life of a blind person. It is full of risk: they cannot even walk alone through a busy street or a park, and they always need some assistance from others. They are also curious about the beauty of the world, eager to explore it and to be aware of what is happening in front of them, and they want to be able to find their own things without depending on anyone.

If treatment is not improved through better funding, the number of blind people is predicted to rise from 36 million to 115 million by 2050. A growing ageing population is behind the rising numbers. Some of the highest rates of blindness and vision impairment are in South Asia and sub-Saharan Africa. According to the study, the percentage of the world's population with visual impairments is actually falling, but because the global population is growing and more people are living well into old age, researchers predict that the number of people with sight problems will soar in the coming decades. Analysis of data from 188 countries suggests there are more than 200 million people with moderate to severe vision impairment, a figure expected to rise to more than 550 million by 2050.

Methodology / Approach

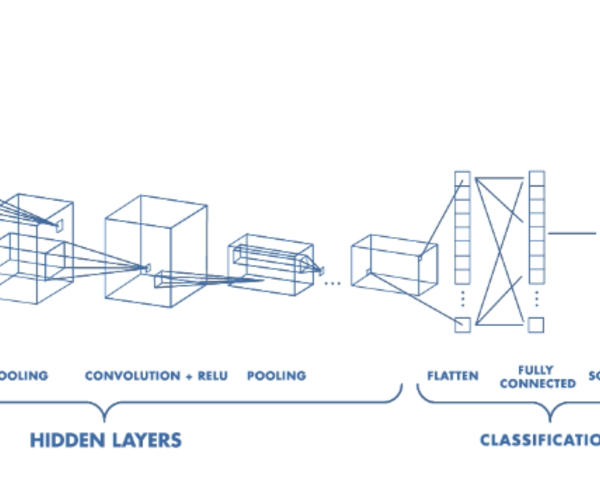

The goal of this research is to provide better image processing using artificial intelligence. Using a convolutional neural network (CNN) image classifier, we predict the correct label with an accuracy of more than 90%. By doing so, we achieved a state-of-the-art result on the CIFAR-10 dataset. We also used the trained model on real-time images and obtained the correct labels.
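The CNN classification step can be illustrated with a minimal, dependency-free Python sketch of a forward pass (convolution, ReLU, 2x2 max pooling, a dense layer, and softmax over the ten CIFAR-10 classes), plus the top-1 accuracy metric behind a figure like "more than 90%". This is not the project's TensorFlow model: it assumes a single-channel 8x8 input instead of CIFAR-10's 32x32 RGB images and uses one convolutional layer, and the hypothetical `random_params` helper produces untrained weights, so its predictions are meaningless until the parameters are learned.

```python
import math
import random

CIFAR10_CLASSES = ["airplane", "automobile", "bird", "cat", "deer",
                   "dog", "frog", "horse", "ship", "truck"]


def conv2d(image, kernels):
    """Valid (unpadded), stride-1 2-D convolution, one output map per kernel.

    Like most deep-learning libraries, this computes cross-correlation
    (the kernel is not flipped).
    """
    h, w = len(image), len(image[0])
    maps = []
    for k in kernels:
        kh, kw = len(k), len(k[0])
        maps.append([[sum(image[i + a][j + b] * k[a][b]
                          for a in range(kh) for b in range(kw))
                      for j in range(w - kw + 1)]
                     for i in range(h - kh + 1)])
    return maps


def relu(maps):
    """Element-wise max(0, x) over every feature map."""
    return [[[max(0.0, v) for v in row] for row in m] for m in maps]


def max_pool2(maps):
    """2x2 max pooling, stride 2; an odd trailing row or column is dropped."""
    return [[[max(m[i][j], m[i][j + 1], m[i + 1][j], m[i + 1][j + 1])
              for j in range(0, len(m[0]) - 1, 2)]
             for i in range(0, len(m) - 1, 2)]
            for m in maps]


def dense(x, weights, biases):
    """Fully connected layer: one weight row and one bias per output."""
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def softmax(z):
    """Numerically stable softmax (subtract the max before exponentiating)."""
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def forward(image, kernels, weights, biases):
    """conv -> ReLU -> pool -> flatten -> dense -> softmax probabilities."""
    features = max_pool2(relu(conv2d(image, kernels)))
    flat = [v for m in features for row in m for v in row]
    return softmax(dense(flat, weights, biases))


def predict(probs):
    """Return the most likely class name and its probability."""
    best = max(range(len(probs)), key=probs.__getitem__)
    return CIFAR10_CLASSES[best], probs[best]


def accuracy(predicted, actual):
    """Top-1 accuracy: fraction of images whose predicted label is correct."""
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


def random_params(seed=0, size=8, n_kernels=2, k=3):
    """Hypothetical helper: untrained random parameters for a size x size input."""
    rng = random.Random(seed)
    kernels = [[[rng.uniform(-1, 1) for _ in range(k)] for _ in range(k)]
               for _ in range(n_kernels)]
    pooled = (size - k + 1) // 2  # side length after conv and 2x2 pooling
    n_in = n_kernels * pooled * pooled
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)]
               for _ in range(len(CIFAR10_CLASSES))]
    biases = [0.0] * len(CIFAR10_CLASSES)
    return kernels, weights, biases
```

For example, `forward(image, *random_params())` returns ten class probabilities for an 8x8 list of pixel values, `predict` picks the most likely class name, and `accuracy(["cat", "dog"], ["cat", "frog"])` is 0.5. In practice, a library such as TensorFlow handles training and the multi-channel convolutions.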

Technologies Used

This project contains three main parts: a Raspberry Pi 3 (powered by Android Things), a camera, and artificial intelligence. When the person presses the button on the device, the camera module takes a picture, and the device analyzes the image using TensorFlow (an open-source library for numerical computation) to detect what the picture shows. The device then describes the picture to the person by voice through a speaker or headphones (1).
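The press, capture, classify, and speak loop described above can be sketched as follows. This is a hedged Python illustration, not the project's Android Things code: `capture`, `classify`, and `speak` are hypothetical stand-ins for the camera module, the TensorFlow model, and a text-to-speech engine, and the 0.3 confidence threshold and sentence wording are assumptions.

```python
from typing import Callable, List, Tuple

# Hypothetical classifier signature: image bytes in, (label, confidence)
# pairs out, sorted from most to least confident.
Classifier = Callable[[bytes], List[Tuple[str, float]]]


def describe(results: List[Tuple[str, float]], threshold: float = 0.3) -> str:
    """Build the sentence to be spoken from the classifier's results."""
    labels = [label for label, conf in results if conf >= threshold]
    if not labels:
        return "I am not sure what this is."
    if len(labels) == 1:
        return f"Detected: {labels[0]}."
    return "Detected: " + ", ".join(labels[:-1]) + f" or {labels[-1]}."


def on_button_pressed(capture: Callable[[], bytes],
                      classify: Classifier,
                      speak: Callable[[str], None],
                      threshold: float = 0.3) -> str:
    """Handle one button press: take a picture, classify it, speak the result."""
    image = capture()            # camera module: grab one frame
    results = classify(image)    # AI model: recognise what is in the frame
    sentence = describe(results, threshold)
    speak(sentence)              # speaker or headphones: voice the result
    return sentence
```

On a device, `on_button_pressed` would be called from the button's press handler; in a test, plain functions or lambdas can stand in for the camera, the model, and the speech engine.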

Repository

https://github.com/ashwanidv100/sample-tensorflow-imageclassifier