Coral Species Classification and Automated Annotation


We developed a CNN-based classifier to automate the point annotation method for coral species analysis from underwater images.

Project status: Published/In Market

Robotics, Artificial Intelligence

Intel Technologies

Intel Opt ML/DL Framework

Overview / Usage

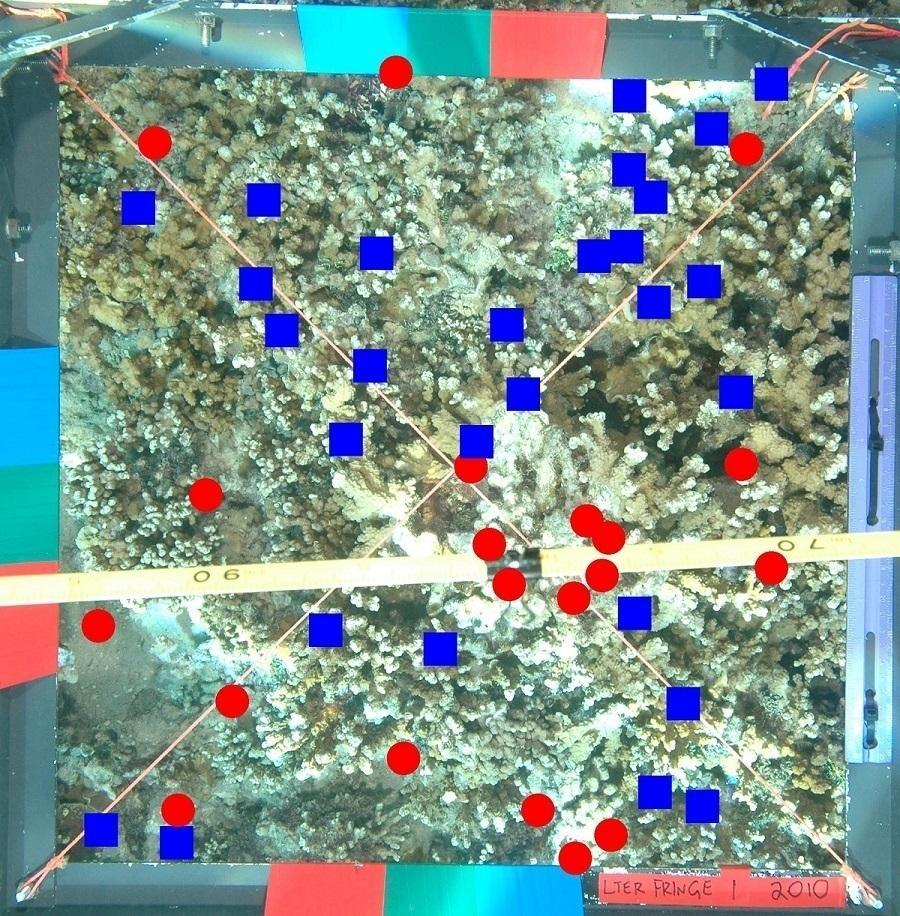

Classifying coral species from visual data is challenging because of significant intra-species variation, high inter-species similarity, inconsistent underwater image clarity, and severe dataset imbalance. In addition, point annotation, the labeling method marine biologists use for coral reef images, is prone to mislabeling. Point annotation also makes existing datasets incompatible with state-of-the-art classification methods, which rely on bounding box annotation. In this paper, we present a novel end-to-end Convolutional Neural Network (CNN) architecture, the Multi-Patch Dense Network (MDNet), that learns to classify coral species from point-annotated visual data. The proposed approach uses patches of different scales centered on point-annotated objects. MDNet also uses dense connectivity among layers to reduce over-fitting on imbalanced datasets. We present experimental results on the Moorea Labeled Coral (MLC) benchmark dataset, where MDNet achieves higher accuracy and average class precision than state-of-the-art approaches.

Methodology / Approach

We propose a novel deep learning framework for coral classification, the Multi-Patch Dense Network (MDNet), inspired by DenseNet (Huang et al.). The MLC benchmark dataset uses the point annotation technique. Point annotation assumes that the annotated point lies on an object, so comparatively small patches (28x28) with the annotated point at their center should best represent that object. In practice, this holds for most small patches. However, a number of annotated points do not lie exactly on the object, and some small patches contain no object at all. Larger patches (84x84 or 112x112), on the other hand, always contain the annotated object, but in many cases they also include several different coral species and parts of the (non-coral) background. In our empirical analysis, we observed complex spatial boundaries among coral species, which makes it nearly impossible to find a single patch size that contains only the object for every annotated point. It is therefore essential to consider both smaller and larger patches to extract useful information such as texture and shape.
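To make "patches centered on the annotated point" concrete, here is a minimal sketch of extracting a square patch around a point label. It assumes a grayscale image stored as a list of pixel rows and zero padding for points near the image border; the function name `extract_patch` and the padding rule are illustrative assumptions, not MDNet's published preprocessing.

```python
from typing import List

Image = List[List[int]]  # grayscale image as rows of pixel values (assumed layout)


def extract_patch(image: Image, row: int, col: int, size: int, pad_value: int = 0) -> Image:
    """Return a size x size patch with the annotated point (row, col) at index size // 2.

    Pixels outside the image are filled with pad_value. This border rule is an
    assumption for illustration; the original work does not describe it.
    """
    height, width = len(image), len(image[0])
    top = row - size // 2   # first image row covered by the patch
    left = col - size // 2  # first image column covered by the patch
    patch = []
    for r in range(top, top + size):
        patch_row = []
        for c in range(left, left + size):
            inside = 0 <= r < height and 0 <= c < width
            patch_row.append(image[r][c] if inside else pad_value)
        patch.append(patch_row)
    return patch
```

For example, `extract_patch(image, row=1, col=8, size=4)` on a 10x10 image returns a 4x4 patch whose first row is padding (it lies above the image) and whose element `[2][2]` is the annotated pixel `image[1][8]`.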

The key idea of this work is to start with a large 112x112 patch and iteratively crop toward its center. The network can then use these varying patch sizes to learn the texture and appearance of distinct coral species. Another important feature of the network is that its layers are densely connected to reduce over-fitting.
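The iterative cropping step can be sketched as repeatedly taking a centered window from the previous crop. This sketch assumes a step of 28 pixels (112, 84, 56, 28); only 112, 84, and 28 are named above, so the 56x56 level is an assumption. How MDNet feeds the resulting crops into the network (resizing or separate branches) is not described here, so the sketch covers only the cropping. The names `center_crop` and `multi_patch_pyramid` are hypothetical.

```python
from typing import List, Sequence

Image = List[List[int]]  # square patch as rows of pixel values (assumed layout)


def center_crop(patch: Image, size: int) -> Image:
    """Crop a size x size window from the center of a square patch."""
    n = len(patch)
    if size > n:
        raise ValueError(f"crop size {size} exceeds patch size {n}")
    offset = (n - size) // 2
    return [row[offset:offset + size] for row in patch[offset:offset + size]]


def multi_patch_pyramid(patch: Image, sizes: Sequence[int] = (112, 84, 56, 28)) -> List[Image]:
    """Crop toward the center, largest size first; each crop is taken from the previous one.

    The default sizes (in particular 56) are an assumed schedule, not a published one.
    """
    crops = []
    current = patch
    for size in sizes:
        current = center_crop(current, size)
        crops.append(current)
    return crops
```

With a 112x112 patch whose annotated point sits at index 56, every crop keeps that point at index `size // 2`. Because each level is nested inside the previous one, the smallest crop focuses on the texture at the point, while the larger ones retain the surrounding shape and context.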

Technologies Used

TensorFlow, NVIDIA GeForce GTX 1080, Intel Neural Compute Stick (deployment on the Aqua2 AUV for coral reef monitoring)