DL performance on Intel Hardware


Hands-on experiments with the basics of deep learning using Intel hardware, the Intel-optimized MKL and the OpenVINO toolkit. The project measures and compares the performance of different Intel compute nodes for both the training and the inference stages.

Project status: Concept

Internet of Things, Artificial Intelligence

Intel Technologies

DevCloud, Intel Python, Movidius NCS, OpenVINO, Intel Integrated Graphics

Overview / Usage

This project explores different deep learning algorithms using the Intel DevCloud. It has two parts. Part 1 covers model training with MKL-optimized TensorFlow and the Intel Distribution for Python*. Part 2 covers hardware optimization for inference on Intel compute nodes using the OpenVINO toolkit. The training phase includes comparing different CPU optimization configurations, selecting hyperparameters, and similar experiments. It also includes writing Jupyter Notebooks on how to build neural networks such as convolutional networks, RNNs and LSTMs, and on training techniques such as Adam, dropout and batch normalization. We also study the benefits of adaptive learning-rate optimizers (Adagrad, Adadelta, RMSprop and Adam). Finally, we use transfer learning to speed up training: a network pretrained on another dataset has already learned general features such as edge detectors, so it only needs to be fine-tuned on the new dataset.
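To make the adaptive learning-rate optimizers concrete, here is a minimal pure-Python sketch of the four update rules named above (Adagrad, Adadelta, RMSprop, Adam). It runs them on a hypothetical toy quadratic with curvatures 1 and 100 rather than on a real network. The learning rates, step count and function names are illustrative assumptions, not tuned values from this project. Each optimizer keeps per-parameter statistics of past gradients, so every coordinate gets its own effective step size.

```python
import math

# Hypothetical toy problem: ill-conditioned quadratic f(x) = 0.5 * sum(a_i * x_i^2).
CURVATURE = [1.0, 100.0]


def loss(x):
    return 0.5 * sum(a * xi * xi for a, xi in zip(CURVATURE, x))


def grad(x):
    # Gradient of the quadratic: df/dx_i = a_i * x_i
    return [a * xi for a, xi in zip(CURVATURE, x)]


def adagrad(x0, steps, lr=0.1, eps=1e-8):
    x = list(x0)
    g2_sum = [0.0] * len(x)  # running SUM of squared gradients (only grows)
    for _ in range(steps):
        g = grad(x)
        for i in range(len(x)):
            g2_sum[i] += g[i] ** 2
            x[i] -= lr * g[i] / (math.sqrt(g2_sum[i]) + eps)
    return x


def rmsprop(x0, steps, lr=0.01, rho=0.9, eps=1e-8):
    x = list(x0)
    g2_avg = [0.0] * len(x)  # exponential moving AVERAGE of squared gradients
    for _ in range(steps):
        g = grad(x)
        for i in range(len(x)):
            g2_avg[i] = rho * g2_avg[i] + (1 - rho) * g[i] ** 2
            x[i] -= lr * g[i] / (math.sqrt(g2_avg[i]) + eps)
    return x


def adadelta(x0, steps, rho=0.95, eps=1e-6):
    x = list(x0)
    g2_avg = [0.0] * len(x)   # E[g^2]
    dx2_avg = [0.0] * len(x)  # E[dx^2]
    for _ in range(steps):
        g = grad(x)
        for i in range(len(x)):
            g2_avg[i] = rho * g2_avg[i] + (1 - rho) * g[i] ** 2
            # Step = RMS(past updates) / RMS(gradients) * g; no global learning rate.
            dx = -math.sqrt(dx2_avg[i] + eps) / math.sqrt(g2_avg[i] + eps) * g[i]
            dx2_avg[i] = rho * dx2_avg[i] + (1 - rho) * dx ** 2
            x[i] += dx
    return x


def adam(x0, steps, lr=0.05, beta1=0.9, beta2=0.999, eps=1e-8):
    x = list(x0)
    m = [0.0] * len(x)  # first-moment estimate (momentum)
    v = [0.0] * len(x)  # second-moment estimate
    for t in range(1, steps + 1):
        g = grad(x)
        for i in range(len(x)):
            m[i] = beta1 * m[i] + (1 - beta1) * g[i]
            v[i] = beta2 * v[i] + (1 - beta2) * g[i] ** 2
            m_hat = m[i] / (1 - beta1 ** t)  # bias correction for the zero init
            v_hat = v[i] / (1 - beta2 ** t)
            x[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


if __name__ == "__main__":
    x0 = [1.0, 1.0]
    print(f"initial loss: {loss(x0):.4f}")
    for name, opt in [("Adagrad", adagrad), ("Adadelta", adadelta),
                      ("RMSprop", rmsprop), ("Adam", adam)]:
        print(f"{name:8s} loss after 200 steps: {loss(opt(x0, 200)):.4f}")
```

In the notebooks these rules would normally be used through the framework's built-in optimizers (for example `tf.keras.optimizers.Adam`, `Adagrad`, `Adadelta` and `RMSprop`) rather than written by hand. The hand-written version only serves to show how each one scales the step.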

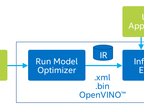

The inference phase will showcase the use of the Intel Media SDK and the OpenVINO toolkit for building basic computer vision (CV) applications: optimizing the models and deploying them with the Inference Engine. The inference performance of different Intel hardware (CPU, GPU, Movidius and FPGA) will be compared.
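A fair device comparison needs the same timing procedure on every target. One way to do that, sketched here with the standard library only, is to wrap each candidate in a zero-argument callable and time it identically. A candidate might be an OpenVINO model loaded on "CPU", "GPU", "MYRIAD" (the Movidius NCS device name in OpenVINO releases of that period) or an FPGA target. The names `benchmark` and `fake_inference` are hypothetical, and the workload is a stand-in, not a real model.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(infer: Callable[[], object], warmup: int = 10,
              iterations: int = 100) -> Dict[str, float]:
    """Time a zero-argument inference callable; report latency and throughput."""
    if iterations < 1:
        raise ValueError("iterations must be >= 1")
    # Warm-up runs let lazy initialization, caches and device queues settle.
    for _ in range(warmup):
        infer()
    latencies_ms = []
    for _ in range(iterations):
        start = time.perf_counter()
        infer()
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    total_s = sum(latencies_ms) / 1000.0
    ordered = sorted(latencies_ms)
    return {
        "mean_ms": statistics.mean(latencies_ms),
        "median_ms": statistics.median(latencies_ms),
        # Simple index-based approximation of the 95th percentile.
        "p95_ms": ordered[int(0.95 * (len(ordered) - 1))],
        # Sequential (one request at a time) throughput.
        "throughput_fps": iterations / total_s if total_s > 0 else float("inf"),
    }


def fake_inference():
    # Hypothetical stand-in for a real model call on one device.
    return sum(i * i for i in range(10_000))


if __name__ == "__main__":
    devices = {"CPU (stand-in)": fake_inference}  # add one entry per device
    for name, fn in devices.items():
        stats = benchmark(fn, warmup=5, iterations=50)
        print(name, {k: round(v, 3) for k, v in stats.items()})
```

The throughput figure assumes synchronous, one-at-a-time requests. Asynchronous or batched inference, which accelerators such as the NCS often rely on, would need a separate measurement.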

Methodology / Approach

We use the Intel DevCloud with OpenVINO, Python, OpenCV and TensorFlow.
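For the CPU optimization configurations mentioned in the overview, the usual tuning knobs for MKL-optimized TensorFlow are the Intel OpenMP runtime variables `OMP_NUM_THREADS`, `KMP_BLOCKTIME` and `KMP_AFFINITY`, together with TensorFlow's intra-op and inter-op thread counts. This is a sketch that enumerates a small grid of such configurations. The grid values, the `CpuConfig` structure and the helper names are assumptions for illustration, not settings taken from this project.

```python
import itertools
import os
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class CpuConfig:
    """One CPU-optimization configuration to benchmark (illustrative structure)."""
    env: Dict[str, str]     # Intel OpenMP runtime environment variables
    intra_op_threads: int   # threads used inside a single op (e.g. one convolution)
    inter_op_threads: int   # independent ops allowed to run concurrently


def cpu_configs(physical_cores: int, blocktimes=(0, 1),
                inter_ops=(1, 2)) -> List[CpuConfig]:
    """Enumerate a small grid of configurations (assumed values) to compare."""
    if physical_cores < 1:
        raise ValueError("physical_cores must be >= 1")
    configs = []
    for blocktime, inter in itertools.product(blocktimes, inter_ops):
        env = {
            "OMP_NUM_THREADS": str(physical_cores),
            # Milliseconds a thread spins after a parallel region before sleeping.
            "KMP_BLOCKTIME": str(blocktime),
            # Bind threads to cores, packed compactly.
            "KMP_AFFINITY": "granularity=fine,compact,1,0",
        }
        configs.append(CpuConfig(env, physical_cores, inter))
    return configs


def apply_env(config: CpuConfig) -> None:
    """Export the variables; do this before TensorFlow (and OpenMP) is imported."""
    os.environ.update(config.env)


if __name__ == "__main__":
    cores = os.cpu_count() or 1  # counts logical CPUs, not physical cores
    for cfg in cpu_configs(cores):
        print(cfg.env["KMP_BLOCKTIME"], cfg.intra_op_threads, cfg.inter_op_threads)
```

Since the OpenMP runtime reads these variables when it initializes, each configuration is best run in a fresh process. In TensorFlow 2.x the thread counts can be passed to `tf.config.threading.set_intra_op_parallelism_threads` and `tf.config.threading.set_inter_op_parallelism_threads`. In TensorFlow 1.x they are the `intra_op_parallelism_threads` and `inter_op_parallelism_threads` fields of `tf.ConfigProto`.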

We will follow the methods in the attached papers (see links) and the Deeplearning.ai courses.