YogAI

AI personal trainer using pose estimation to guide and correct a yogi.

Project status: Under Development

Overview / Usage

When exercising at home, most people tune into an exercise video and try to follow the instructions as best they can. However, the instructor in the video can't see what the user is doing and can't provide feedback to help the student improve. Using state-of-the-art computer vision models, we can create a virtual instructor that responds to the student's movements and provides the feedback missing from generic exercise videos. We imagine this instructor built into a smart mirror, to resemble how a user would normally exercise. With a voice assistant on the device, the student can easily start, stop, pause, and resume a class.

Methodology / Approach

We began by capturing images of a user through a smart mirror mounted on a wall. By framing photos from the perspective of a large mirror on the wall, rather than from a yoga mat on the ground looking upward, we could train models using yoga photos from the wild. These typically show the full body from a distance, at the height a photographer would normally shoot from.

The mirror is a ubiquitous gym tool because of the value of visual perspective when coordinating body motions. We wanted to take YogAI further than any existing commercial product by offering real-time corrective posture advice through an intelligent assistant. It is ready to train when you are, and it doesn't send your data to remote servers.

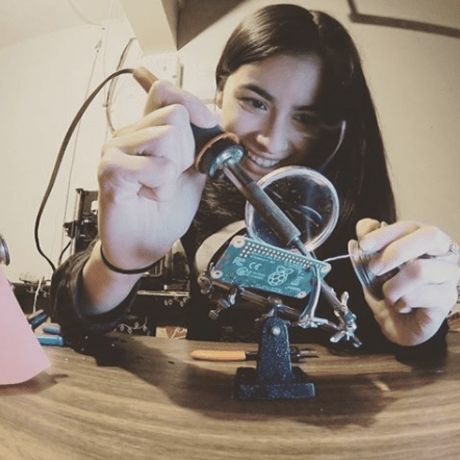

Making a smart mirror is simple enough: you just need an old monitor, a Raspberry Pi, and a one-way mirror. We add a camera and a microphone to support a voice user interface (VUI) and visual analysis of the user, all taking place on-device.

To evaluate our concept, we began by gathering images of yoga poses with image searches for terms like 'yoga tree pose', 'yoga triangle pose', etc. We chose yoga because the movements are relatively static compared to other athletic maneuvers, which makes the constraints on inference frame rate less demanding. We could quickly filter out irrelevant photos and refine our queries to build a corpus of a couple thousand yoga pose images.

We'd love to achieve the speed of a CNN classifier. However, with only a few thousand images of people in different settings, clothing, positions, etc., we are unlikely to find that path fruitful. Instead, we turn to pose estimation models. These are especially well suited to our task because they reduce all the scene complexity down to the pose information we want to evaluate. These models are not quite as fast as classifiers, but with TensorFlow Lite we manage roughly 2.5 FPS on a Raspberry Pi 3.

Pose estimation gets us part of the way. To realize YogAI, we need something new: a function that takes us from pose estimates to yoga position classes. With up to 14 body keypoints, each of our couple thousand images can be represented as a vector in a 28-dimensional real linear space (one x and one y coordinate per keypoint). By convention, we take the x and y indices of the mode of each keypoint slice of the pose estimation model's belief map. In other words, the pose estimation model outputs a tensor shaped like (1, 96, 96, 14), where each slice along the final axis is a 96x96 belief map for the location of a particular body keypoint. Taking the argmax of each slice, we find the most likely position of that slice's keypoint relative to the framing of the input image.
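The belief-map-to-vector step described above can be sketched with NumPy. This is a minimal illustration, not the project's actual code: the function name `belief_maps_to_pose_vector` and the interleaved (x0, y0, x1, y1, ...) ordering are assumptions made here for clarity.

```python
import numpy as np


def belief_maps_to_pose_vector(belief_maps: np.ndarray) -> np.ndarray:
    """Convert a (1, H, W, K) belief-map tensor into a flat 2K pose vector.

    Hypothetical helper: the output ordering (x0, y0, x1, y1, ...) is an
    assumption, not something the original write-up specifies.
    """
    _, height, width, num_keypoints = belief_maps.shape
    # Flatten each H x W slice so a single argmax per keypoint finds its peak.
    flat = belief_maps[0].reshape(height * width, num_keypoints)
    peak = flat.argmax(axis=0)
    # Recover (row, column) indices of each peak in the original H x W grid.
    rows, cols = np.unravel_index(peak, (height, width))
    # x is the column index and y is the row index.
    return np.stack([cols, rows], axis=1).reshape(-1).astype(np.float32)


# Synthetic example: place keypoint 0 at row 10, column 20.
maps = np.zeros((1, 96, 96, 14), dtype=np.float32)
maps[0, 10, 20, 0] = 1.0
pose = belief_maps_to_pose_vector(maps)
```

With the (1, 96, 96, 14) output described above, `pose` has 28 entries, and the first two hold the x and y indices of the first keypoint.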

This representation of the input image offers the additional advantage of reducing the dimensionality of our problem for greater statistical efficiency in building a classifier. We regard the pose estimation process as an image feature extractor for a pose classifier based on gradient boosting machines, implemented with XGBoost.

By creating a helper function that applies random geometric transformations directly to the 28-dimensional pose vector, we were able to augment our dataset without running costly pose estimation on more images. This helped us quickly grow our training set from a couple thousand vectors to tens of thousands by randomly applying small rotations, flips, and translations to a sample vector. We were then able to quickly demonstrate our approach by training a gradient boosting machine.
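A helper of the kind described above might look like the following sketch. The function name `augment_pose`, the parameter ranges, and the interleaved (x, y) layout are all assumptions for illustration; the project's own helper may differ. Note that this sketch mirrors coordinates on a flip without swapping left and right keypoint labels, since the keypoint ordering is not given in the text.

```python
import numpy as np


def augment_pose(pose, rng, max_rotation_deg=10.0, max_shift=5.0,
                 flip_prob=0.5, size=96):
    """Randomly rotate, flip, and translate a flat (x0, y0, x1, y1, ...) pose.

    Hypothetical helper; default ranges are illustrative, not tuned values.
    """
    points = np.asarray(pose, dtype=np.float64).reshape(-1, 2)
    center = (size - 1) / 2.0

    # Small rotation about the center of the belief-map grid.
    theta = np.deg2rad(rng.uniform(-max_rotation_deg, max_rotation_deg))
    rotation = np.array([[np.cos(theta), -np.sin(theta)],
                         [np.sin(theta), np.cos(theta)]])
    points = (points - center) @ rotation.T + center

    # Horizontal flip: mirror x coordinates (left/right labels are NOT swapped).
    if rng.random() < flip_prob:
        points[:, 0] = (size - 1) - points[:, 0]

    # One random translation applied to every keypoint.
    points = points + rng.uniform(-max_shift, max_shift, size=2)

    # Keep coordinates inside the grid.
    return np.clip(points, 0, size - 1).reshape(-1)


rng = np.random.default_rng(0)
sample = np.full(28, 48.0)
augmented = np.stack([augment_pose(sample, rng) for _ in range(10)])
```

Because the transformation acts on 28 numbers rather than on pixels, generating ten variants per sample, as in the last line, costs almost nothing compared to another pose estimation pass.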

It was natural to consider how we might use pose estimates over time to get a more robust view of a figure's position in the frame. Having built a reasonable pose classifier, this also raises the question of how we might generalize our work to classifying motion.

Our first idea here was to concatenate the pose vectors from 2 or 3 successive time steps and try to train the tree model to recognize a motion. To keep things simple, we started by trying to differentiate between standing, squatting, and forward bends (deadlifts). These categories were chosen to test both static and dynamic maneuvers. Squats and deadlifts live on similar planes of motion and are both leg-dominant moves, though they activate opposing muscle groups.

Our gradient boosting machine model seemed to lack the capacity to perform reasonably on this task. We decided to apply LSTMs to sequences of pose vectors arranged in 28 x d blocks, sweeping d over {2, 3, 5}. After some experimentation, we determined that 2 LSTM blocks followed by 2 fully connected layers on a 28 x 5 input sequence yielded a reasonable model.
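Both sequence formats mentioned above, the concatenated vectors tried with the tree model and the blocks fed to the LSTM, can be built from the same sliding window over per-frame pose vectors. The sketch below assumes a hypothetical helper named `make_pose_windows` and a time-major (d, 28) layout, which is what sequence layers commonly expect; transposing each window gives the 28 x d arrangement.

```python
import numpy as np


def make_pose_windows(poses, d):
    """Stack every run of d consecutive pose vectors into one window.

    Hypothetical helper: input is (T, 28), output is (T - d + 1, d, 28).
    """
    poses = np.asarray(poses)
    if poses.ndim != 2 or len(poses) < d:
        raise ValueError("need a (T, features) array with T >= d")
    return np.stack([poses[i:i + d] for i in range(len(poses) - d + 1)])


# Ten frames of synthetic 28-dimensional pose vectors.
frames = np.arange(10 * 28, dtype=np.float32).reshape(10, 28)

# Sequence input for a recurrent model with d = 5.
windows = make_pose_windows(frames, d=5)

# Flattened variant: d successive pose vectors concatenated into one row.
concatenated = windows.reshape(len(windows), -1)
```

Here `windows` has shape (6, 5, 28) and `concatenated` has shape (6, 140), so the same frames can feed either model family.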

A big goal for YogAI is to direct a yoga workout while offering corrective posture advice. We now have a viable approach to the fundamental problem of posture evaluation and classification. We might start to ask questions like: can we adapt or control the workout to target a heart rate range, adjust for skill level, or optimize for user satisfaction?

Technologies Used

tensorflow, tflite, keras, XGBoost, snips.ai, flite, raspberry pi, web camera, microphone

Repository

https://github.com/smellslikeml/YogAI